The Architecture of Understanding: How “Attention” Redefined Intelligence

In the history of technology, there are moments that act as “hinge points”: single events that fundamentally alter everything that follows. In the world of Artificial Intelligence, one such moment arrived in 2017 with the publication of a research paper titled “Attention Is All You Need.”

Before this paper, AI was struggling with a “memory” problem. After it, the world was introduced to the Transformer, the engine that now powers everything from ChatGPT to the predictive models we build at Meganous. To understand why your business can now “talk” to its data, you have to understand the shift from sequential processing to attentional processing.

The Pre-Transformer Era: The “Telephone Game” Problem

To appreciate the Transformer, we must look at what came before: Recurrent Neural Networks (RNNs).

Imagine trying to understand a 500-page legal contract by reading it one word at a time, from left to right, and trying to remember the first sentence by the time you reach the last page. RNNs processed data sequentially. This created two massive bottlenecks:

- Forgetting the Context: By the time the AI reached the end of a long paragraph, it had largely “forgotten” the subject at the beginning. This made it very difficult to capture long-range relationships in data.

- Slow Processing: Because it worked word-by-word, it couldn’t be “parallelized.” You couldn’t use 100 computer chips to work on 100 parts of the sentence at once; each word had to wait until the word before it had been processed.
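Both bottlenecks can be seen in a toy example. The sketch below is a one-unit recurrent network written in plain Python; the function name `rnn_forward` and the weights `w_x` and `w_h` are made up for illustration and are not taken from any real model. It is a minimal illustration of the idea, not a production RNN.

```python
import math

def rnn_forward(inputs, w_x=1.0, w_h=0.5):
    """Run a toy one-unit recurrent network over a list of numbers.

    w_x and w_h are hypothetical scalar weights chosen for illustration.
    """
    h = 0.0  # hidden state: the network's only "memory"
    states = []
    for x in inputs:
        # Step t needs the h produced by step t-1, so the steps
        # must run one after another and cannot be parallelized.
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

# Two sequences that differ only in their first element.
a = rnn_forward([1.0] + [0.0] * 20)
b = rnn_forward([0.0] + [0.0] * 20)

# The gap between the two runs shows how much of the first input survives.
first_gap = abs(a[0] - b[0])    # large: the first step clearly sees the input
last_gap = abs(a[-1] - b[-1])   # tiny: 20 steps later it has almost vanished
print(first_gap, last_gap)
```

With these weights, the trace of the first input shrinks at every step, which is the “telephone game” in miniature.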

The Breakthrough: “Attention” as a Spotlight

The 2017 paper proposed a radical idea: Stop processing the sequence one step at a time. Use Attention.

Instead of reading left to right, the Transformer looks at the entire input sequence at once, using positional encodings to keep track of word order. It uses a mechanism called Self-Attention to decide which parts of the input are most relevant to each other and to the current task.

Think of it as a “Digital Spotlight.” When a Transformer reads the sentence, “The bank was closed because the river overflowed,” the “Attention” mechanism directly links the word “bank” to “river.” It gives little weight to “the” and “was” and shines its spotlight on the relationship between the water and the land.
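For readers who want to see the spotlight as arithmetic, here is a minimal sketch of scaled dot-product self-attention in plain Python. The two-dimensional word vectors are hand-picked for illustration, and the same vectors are used as queries, keys, and values; a real Transformer would instead learn separate projections for each. The names `softmax` and `self_attention` are ours, not a library API.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:  # each row is independent of the others
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hand-picked toy vectors (an assumption): "bank" and "river" point in
# similar directions, "the" is close to zero.
tokens = ["the", "bank", "river"]
vectors = [[0.1, 0.0], [1.0, 2.0], [0.8, 2.2]]

outputs, weights = self_attention(vectors, vectors, vectors)
bank_weights = dict(zip(tokens, weights[1]))
print(bank_weights)  # "river" gets the largest weight, "the" the smallest
```

Note that each row of weights is computed without reference to any other row, which is what allows the work to be spread across many processors.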

This changed everything for three reasons:

-

Infinite Context: The AI can now “see” the relationship between a data point on page 1 and a data point on page 1,000 instantly.

-

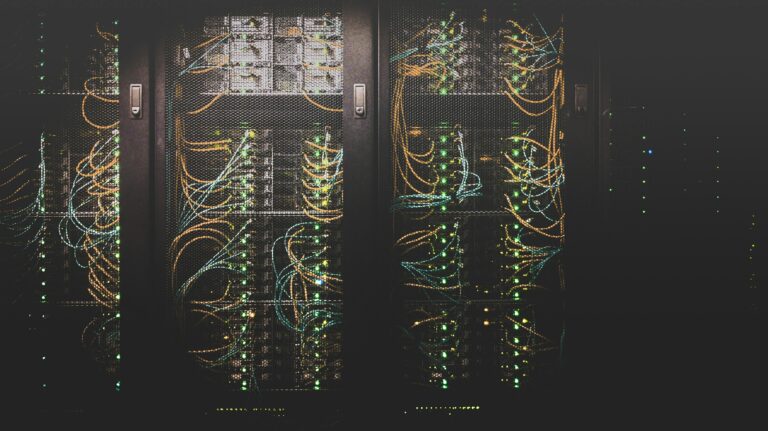

Massive Speed: Because the model looks at everything at once, we can use massive clusters of GPUs to process data in parallel. This is why AI went from simple “auto-correct” to “writing entire essays” in just a few years.

-

Computational Discipline: It allowed us to train much larger models on much larger datasets without the math “breaking” under its own weight.

Why This Matters for the Modern Enterprise

At Meganous, we don’t just admire the Transformer for its academic brilliance; we use it as the foundation for Predictive Parsimony. The “Attention” discovery allowed us to move away from noisy, bulky data toward lean, high-speed intelligence.

1. From Translation to Prediction

While the original paper focused on language translation, the “Attention” logic applies to any sequence, including your sales history, supply chain movements, or sensor data. We use Transformer-based architectures to help businesses predict market shifts by “attending” to the specific variables that actually drive revenue, rather than getting lost in the noise of millions of irrelevant data points.
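To make the idea of “attending” to a numeric history concrete, here is a toy sketch using a single attention query over past weeks of sales. Everything in it is hypothetical: the feature layout, the sales figures, the `attend` helper, and the hand-chosen scaling of the query are illustrative assumptions, not a description of any production system. A trained model would learn these representations from data.

```python
import math

def attend(query, keys, values):
    """Single-query dot-product attention: weight past values by similarity."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    estimate = sum(w * v for w, v in zip(weights, values))
    return estimate, weights

# Hypothetical weekly history: features are [promotion_running, holiday_week].
past_features = [[0, 0], [1, 0], [0, 0], [1, 1], [0, 1]]
past_sales = [100.0, 140.0, 95.0, 210.0, 150.0]  # made-up units sold

# Next week has both a promotion and a holiday. The factor of 4 is a
# hand-picked scale that sharpens the weights; it is not a learned value.
next_week = [4.0, 4.0]

estimate, weights = attend(next_week, past_features, past_sales)
print(weights)   # the earlier promotion-plus-holiday week dominates
print(estimate)  # a weighted blend of past sales, pulled toward that week
```

The estimate is only a weighted average of past values, so it can never exceed the largest figure in the history; it is meant to show where the weights land, not to serve as a forecasting method.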

2. Powering the Agentic Framework

The Transformer is the “engine,” and our Agentic Architecture is the “vehicle.” Because Transformers can understand context so well, we can build specialized agents that master specific business domains. One agent “attends” to your legal compliance, while another “attends” to your inventory math, both utilizing the same core logic of the Transformer to remain accurate and fast.

3. Efficiency and ROI

By using “Attention” mechanisms, we can build models that train more efficiently on modern parallel hardware than older, sequential systems. We can train a model to be a “specialist” in your specific industry by showing it only the most relevant data, ensuring a faster and more efficient workflow for your team.

The Meganous Philosophy: Harnessing the Spotlight

The “Attention Is All You Need” paper showed that intelligence isn’t about knowing everything; it’s about knowing what to ignore.

We apply this same philosophy to your business. We don’t just throw “more AI” at your problems. We use End-to-End Pipelining to ensure the “spotlight” of the Transformer is shining on clean, high-value data. We build the warehouses, the pipelines, and the models so that when you ask a question, the “Attention” of your system is focused exactly where it needs to be.

Conclusion: The New Standard

The landscape of AI changed in 2017 because we stopped trying to make machines “read” and started making them “understand.” The Transformer is one of the most powerful tools ever created for finding patterns in chaos.

Whether you are looking to automate your customer interactions or predict your next five years of growth, the legacy of the Transformer is what makes it possible. At Meganous, we turn that academic legacy into your competitive advantage.